Iterators and Joins

Introduction #

As you may have seen already, some SQL queries involve joining lots of tables together to get the data we need. However, that joining comes at a cost- every join multiplies the number of rows in the output by the number of rows in the table!

For this reason, it’s very important that we try to optimize the join operation as much as possible, such that we can minimize the amount of data to process. In this section, we’ll explore a methods of doing this, and compare their runtimes.

Tip

I would recommend playing around with the Loop Join Animations visualizer I made- it will help provide some intuition for the first few joins since staring at an algorithm isn’t for everyone!

The discussion slides also have a full walkthrough of the more involved joins (SMJ, GHJ).

Relevant Materials #

Cost Notation #

Make sure you keep this section around (whether it’s in your head, or bookmarked)! It’ll be extremely useful for this section.

Suppose is a table.

- is the number of pages needed to store .

- is the number of records per page of .

- is the number of records in , also known as the cardinality of .

- .

Simple Nested Loop Join #

Intuitively, joining two tables is essentially a double for loop over the records in each table:

for record r in R:

for record s in S:

if join_condition(r, s):

add <r, s> to result buffer

where join_condition is an optional function, also known as , that returns a boolean (true if record should be added to result).

The cost of a simple join is the cost of scanning once, added to the cost of scanning once per tuple in :

Page Nested Loop Join #

Simple join is inefficient because it requires an I/O for every individual record for both tables.

We can improve this by operating on the page level rather than the record level: before moving onto the next page, process all of the joins for the records on the current page.

for rpage in R:

for spage in S:

for rtuple in rpage:

for stuple in spage:

if join_condition(rtuple, stuple):

add <r, s> to result buffer

Now, the cost becomes the cost of scanning once, then scanning once per page of :

Block Nested Loop Join #

To improve upon loop join even further, let’s take advantage of the fact that we can have pages in our buffer.

Rather than having to load in one page at a time, we can instead load in:

- page of

- output buffer

- pages of

and then load in each page of one by one to join to all pages of before loading in a new set of pages.

for rblock of B-2 pages in R:

for spage in S:

for rtuple in rblock:

for stuple in sblock:

add <rtuple, stuple> to result buffer

The cost now becomes the cost of scanning once, plus scanning once per number of blocks:

Index Nested Loop Join #

In previous version of nested loop join, we’d need to loop through all of the elements in order to join them.

However, with the power of B+ trees, we can quickly look up tuples that are equivalent in the two tables when computing an equijoin.

for rtuple in R:

add <rtuple, S_index.find(joinval)>

Cost: where is the cost of finding all matching tuples

- Alternative 1 B+Tree: cost to traverse root to leave and read all leaves with matching utples

- Alternative 2/3 B+Tree: cost of retrieving RIDs + cost to fetch actual records

- If clustered, 1 IO per page. If not clustered, 1 IO per tuple.

- If no index, then which devolves INLJ into SNLJ.

Sort-Merge Join #

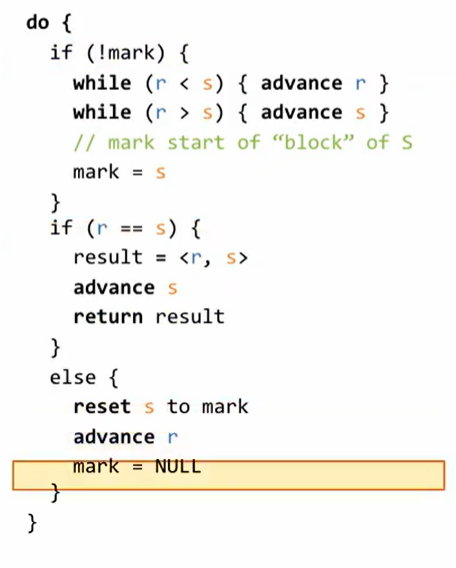

Main idea: When joining on a comparison (like equality or ), sort on the desired indices first, then for every range (group of values with identical indices) check for matches and yield all matches.

The cost of sort-merge join is the sum of:

- The cost of sorting

- The cost of sorting

- The cost of iterating through R once,

- The cost of iterating through S once,

One optimization we can make is to stream both relations directly into the merge part when in the last pass of sorting! This will reduce the I/O cost by removing the need to write and re-read . This subtracts I/Os from the final cost.

Grace Hash Join #

If we have an equality predicate, we can use the power of hashing to match identical indices quickly.

Naively, if we load all records in table into a hash table, we can scan once and probe the hash table for matches- but this requires to be less than where is the hash fill factor.

If the memory requirement of is not satisfied, we will have to partition out and process each group separately.

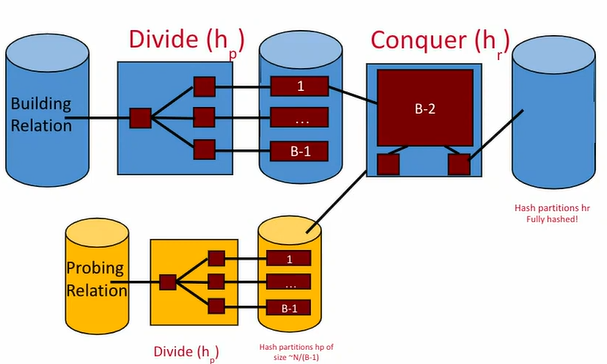

Essentially, Grace Hash Join is very similar to the divide-and-conquer approach for hashing in the first place:

- In the dividing phase, matching tuples between and get put into the same partition.

- In the conquering phase, build a separate small hash table for each partition in memory, and if it matches, stream the partition into the output buffer.

Full process:

- Partitioning step:

- make partitions.

- If any partitions are larger than pages, then recursively partition until they reach the desired size.

- Build and probe:

- Build an in-memory hash table of one table , and stream in tuples of .

- For all matching tuples of and , stream them to the output buffer.

Calculating the I/O Cost of GHJ #

The process of calculating the GHJ cost is extremely similar to that of standard external hashing. The main difference is that we are now loading in two tables at the same time.

Let’s look at the example from Discussion 6:

- Table has 100 pages and 20 tuples per page.

- Table has 50 pages and 50 tuples per page.

- Assume all hash functions partition uniformly.

- Do not include the final writes in the calculation.

- If , what is the I/O cost for Grace Hash Join?

Number of Passes #

Like hashing, our goal is to make the partitions small enough to fit in the buffer. But now that we have two tables, we only need one of them to fit! This is because we can put the smaller table into memory, then stream the larger table in one page at a time using one buffer frame.

As you can see in the image above, as long as one of the tables fits in pages, we’re all set for the Build and Probe stage.

As you can see in the image above, as long as one of the tables fits in pages, we’re all set for the Build and Probe stage.

In each stage, of the Partitioning step, we create partitions, so we solve for the number of recursive passes in the following manner: In this case, is smaller, so we can plug in to confirm that we need passes of partitioning before we can Build and Probe.

Partition Cost #

The partition cost calculation is the same as for hashing. However, we must partition both tables separately using partitions each at each step.

Pass 1:

- The first read takes I/Os.

- Partition into equal partitions of and write it back to disk = I/Os.

- Partition into equal partitions of and write it = I/Os.

Pass 2:

- We read in the results from pass 1: I/Os.

- Partition each of the 7 partitions of 15 into 7 more partitions of , making partitions of size in total. Writing these back takes I/Os.

- Do the same thing for the 7 partitions of 8 to get partitions of size , taking I/Os to write back.

Build and Probe: Building and probing requires reading all of the partitions created in pass 2. This takes $(349) + (249) = 245$ I/Os. Remember that we don’t count the final writes!

Total: I/Os to run GHJ.