Asymptotic Analysis Basics

Content Note

This concept is a big reason why a strong math background is helpful for computer science, even when it’s not obvious that there are connections! Make sure you’re comfortable with Calculus concepts up to power series.

An Abstract Introduction to Asymptotic Analysis

The term asymptotics, or asymptotic analysis, refers to the idea of analyzing functions when their inputs get really big. This is like the asymptotes you might remember learning in math classes, where functions approach a value when they get very large inputs.

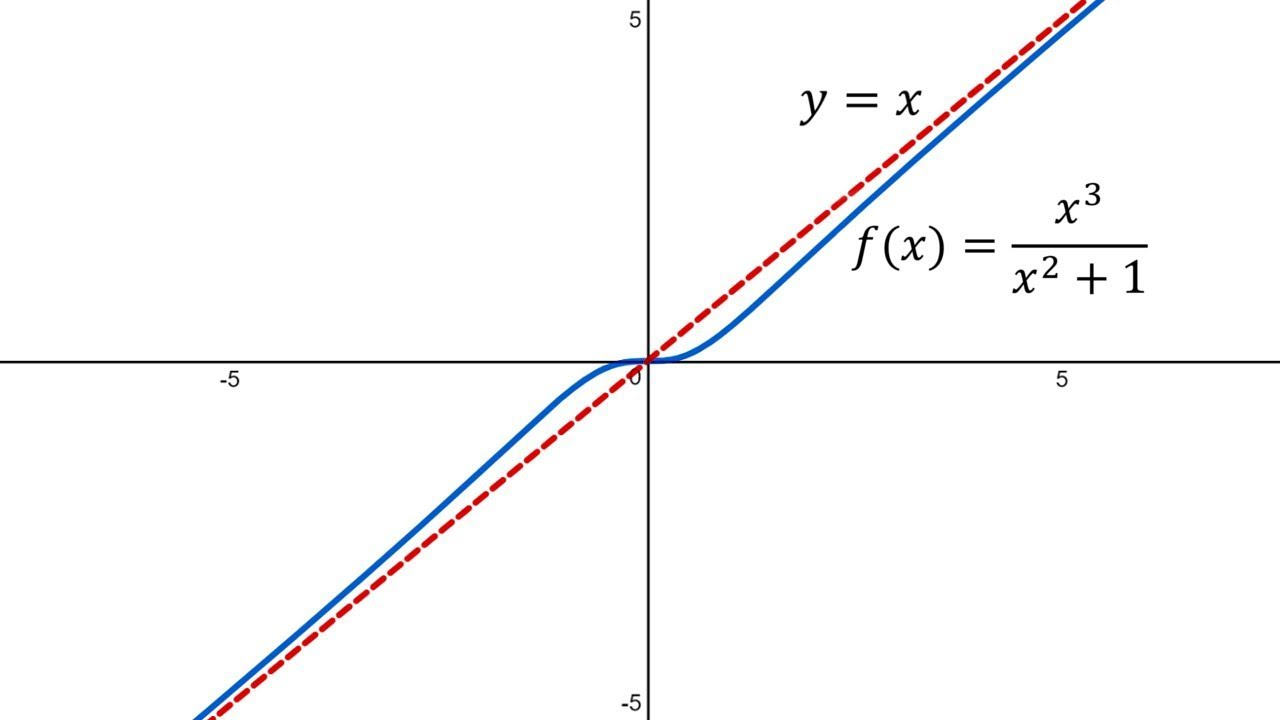

Here, we can see that the two plotted functions look basically identical when x gets really big. Asymptotics is all about reducing functions to their eventual behaviors exactly like this!

That’s cool, but how is it useful?

Graphs and functions are great and all, but at this point it’s still a mystery how we can put these concepts to practical use. Now, we’ll see how we can represent programs as mathematical functions so that we can do cool things like:

- Figure out how much time or space a program will use

- Objectively tell how one program is better than another program

- Choose the optimal data structures for a specific purpose

As you can see, this concept is absolutely fundamental to ensuring that you write efficient algorithms and choose the correct data structures. With the power of asymptotics, you can figure out if a program will take 100 seconds or 100 years to run without actually running it!

How to Measure Programs

In order to convert your public static void main(String[] args) or whatever into y = log(x), we need to figure out what x and y even represent!

TLDR: It depends, but the three most common measurements are time, space, and complexity.

Time is almost always useful to minimize because it could mean the difference between a program being able to run on a smartphone and needing a supercomputer. Time usually increases with the number of operations being run. Loops and recursion will increase this metric substantially. On Linux, the time command can be used for measuring this.
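As a rough illustration of measuring time from inside a program (rather than with the time command), here is a minimal sketch that uses Java's System.nanoTime. The class and method names (TimingDemo, sumTo, timeNanos) are made up for this example, and the numbers it prints will vary with the machine and with JVM warm-up, so treat them as a trend rather than a precise measurement.

```java
// A minimal sketch, not a benchmark harness. TimingDemo, sumTo, and timeNanos
// are hypothetical names chosen for this illustration.
class TimingDemo {
    /** Adds the integers 1..n with a simple loop (n additions). */
    static long sumTo(int n) {
        long sum = 0;
        for (int i = 1; i <= n; i++) {
            sum += i;
        }
        return sum;
    }

    /** Returns the wall-clock nanoseconds that one call to sumTo(n) took. */
    static long timeNanos(int n) {
        long start = System.nanoTime();
        long result = sumTo(n);
        long elapsed = System.nanoTime() - start;
        // Use the result so the call cannot be skipped as dead code.
        if (result < 0) {
            throw new IllegalStateException("unexpected overflow");
        }
        return elapsed;
    }

    public static void main(String[] args) {
        // More loop iterations should generally mean more elapsed time.
        for (int n : new int[] {1_000, 1_000_000, 100_000_000}) {
            System.out.println("n = " + n + " took " + timeNanos(n) + " ns");
        }
    }
}
```

Because the loop body runs n times, the elapsed time should grow roughly in proportion to n once n is large.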

Space is also often nice to reduce, but has become a smaller concern now that we can get terabytes (or even petabytes) of storage pretty easily! Usually, the things that take up lots of space are big lists and a very large number of individual objects. Reducing the size of lists to hold only what you need will be very helpful for this metric!

There is another common metric, which is known as complexity or computational cost. This is a less concrete concept compared to time or space, and cannot be measured easily; however, it is highly generalized and usually easier to think about. For complexity, we can simply assign basic operations (like println, adding, absolute value) a complexity of 1 and add up how many basic operation calls there are in a program.
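To make the "add up the basic operations" idea concrete, here is a small sketch. The names (OperationCounter, singleLoopCost, nestedLoopCost) are hypothetical, and charging exactly 1 per loop body is the simplifying assumption described above, not a precise cost model.

```java
// A sketch of counting basic operations. Each loop body is assumed to do
// exactly one basic operation (for example, one addition or one println).
class OperationCounter {
    /** Cost of a single loop that does one basic operation per iteration. */
    static long singleLoopCost(int n) {
        long cost = 0;
        for (int i = 0; i < n; i++) {
            cost += 1; // stands in for one basic operation
        }
        return cost;
    }

    /** Cost of two nested loops that do one basic operation per (i, j) pair. */
    static long nestedLoopCost(int n) {
        long cost = 0;
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                cost += 1; // stands in for one basic operation
            }
        }
        return cost;
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 100, 1000}) {
            System.out.println("n = " + n
                    + ": single loop = " + singleLoopCost(n)
                    + ", nested loops = " + nestedLoopCost(n));
        }
    }
}
```

Under this model the single loop costs n and the nested loops cost n * n, which is exactly the kind of function we go on to simplify.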

Simplifying Functions

Since we only care about the general shape of the function, we can keep things as simple as possible! Here are the main rules:

- Only keep the fastest growing term. For example, $\log(n) + n$ can be simplified to just $n$ since $n$ grows faster out of the two terms.

- Remove all constants. For example, $5\log(3n)$ can just be simplified to $\log(n)$ since constants don’t change the overall shape of a function.

- Remove all other variables. If a function is really $\log(n + m)$ but we only care about n, then we can simplify it into $\log(n)$.

There are two cases where we can’t remove other variables and constants though, and they are:

- A polynomial term (because $n^2$ grows slower than $n^3$, for example), and

- An exponential term (because $2^n$ grows slower than $3^n$, for example).
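One way to convince yourself that dropping smaller terms and constants keeps the overall shape is to compare a function with its simplified form numerically. The sketch below uses a made-up cost function, 3n^2 + 5n + 7, and its simplified form n^2; the class and method names are hypothetical.

```java
// A sketch comparing a hypothetical cost function with its simplified form.
class SimplifyDemo {
    /** The "full" cost function: 3n^2 + 5n + 7 (an assumed example). */
    static double full(double n) {
        return 3 * n * n + 5 * n + 7;
    }

    /** The simplified form: keep only the fastest growing term, drop the constant. */
    static double simplified(double n) {
        return n * n;
    }

    /** Ratio of the full function to the simplified one. */
    static double ratio(double n) {
        return full(n) / simplified(n);
    }

    public static void main(String[] args) {
        // The ratio settles toward the constant 3 as n grows, so the two
        // functions differ only by a constant factor for large n.
        for (double n : new double[] {10, 1_000, 1_000_000}) {
            System.out.println("n = " + n + ", ratio = " + ratio(n));
        }
    }
}
```

The ratio settling near a constant is what it means for the two functions to have the same shape: the smaller terms stop mattering, and the leftover factor of 3 is a constant we agreed to drop.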

The Big Bounds

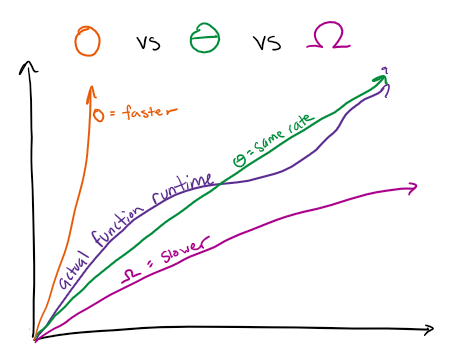

There are three important types of runtime bounds that can be used to describe functions. These bounds put restrictions on how slow or fast we can expect that function to grow!

Big O is an upper bound for a function growth rate. That means that the function grows slower than or at the same rate as the Big O function. For example, a valid Big O bound for $\log(n)$ is $O(n^2)$ since $n^2$ grows at a faster rate.

Big Omega is a lower bound for a function growth rate. That means that the function grows faster than or at the same rate as the Big Omega function. For example, a valid Big Omega bound for $\log(n)$ is $\Omega(1)$ since $1$ (a constant) grows at a slower rate.

Big Theta is a middle ground that describes the function that grows at the same rate as the actual function. Big Theta only exists if there is a valid Big O that is equal to a valid Big Omega. For example, a valid Big Theta bound for $n + \log(n)$ is $\Theta(n)$ since $n$ grows at the same rate (log n is much slower so it adds an insignificant amount).
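As a hedged illustration of how an upper and a lower bound can differ for the same program, consider a simple linear search that counts its comparisons. The class and method names below are hypothetical, and "number of comparisons" is the assumed measurement.

```java
// A sketch showing a program whose cost depends on the input it is given.
class BoundsDemo {
    /** Returns how many comparisons a linear search makes looking for target. */
    static int comparisons(int[] items, int target) {
        int count = 0;
        for (int item : items) {
            count++; // one comparison per element inspected
            if (item == target) {
                break; // found it, stop early
            }
        }
        return count;
    }

    public static void main(String[] args) {
        int[] items = {4, 8, 15, 16, 23, 42};
        // Best case: the target is the first element (a constant number of steps).
        System.out.println("first element: " + comparisons(items, 4));
        // Worst case: the target is last or missing (one step per element).
        System.out.println("last element:  " + comparisons(items, 42));
        System.out.println("missing:       " + comparisons(items, 99));
    }
}
```

Across all inputs, this search never needs more than n comparisons, so O(n) is a valid upper bound, and it always needs at least one, so Ω(1) is a valid lower bound. Since those two bounds do not match, no single Big Theta describes every input at once, although the worst case on its own is Θ(n).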

Orders of Growth

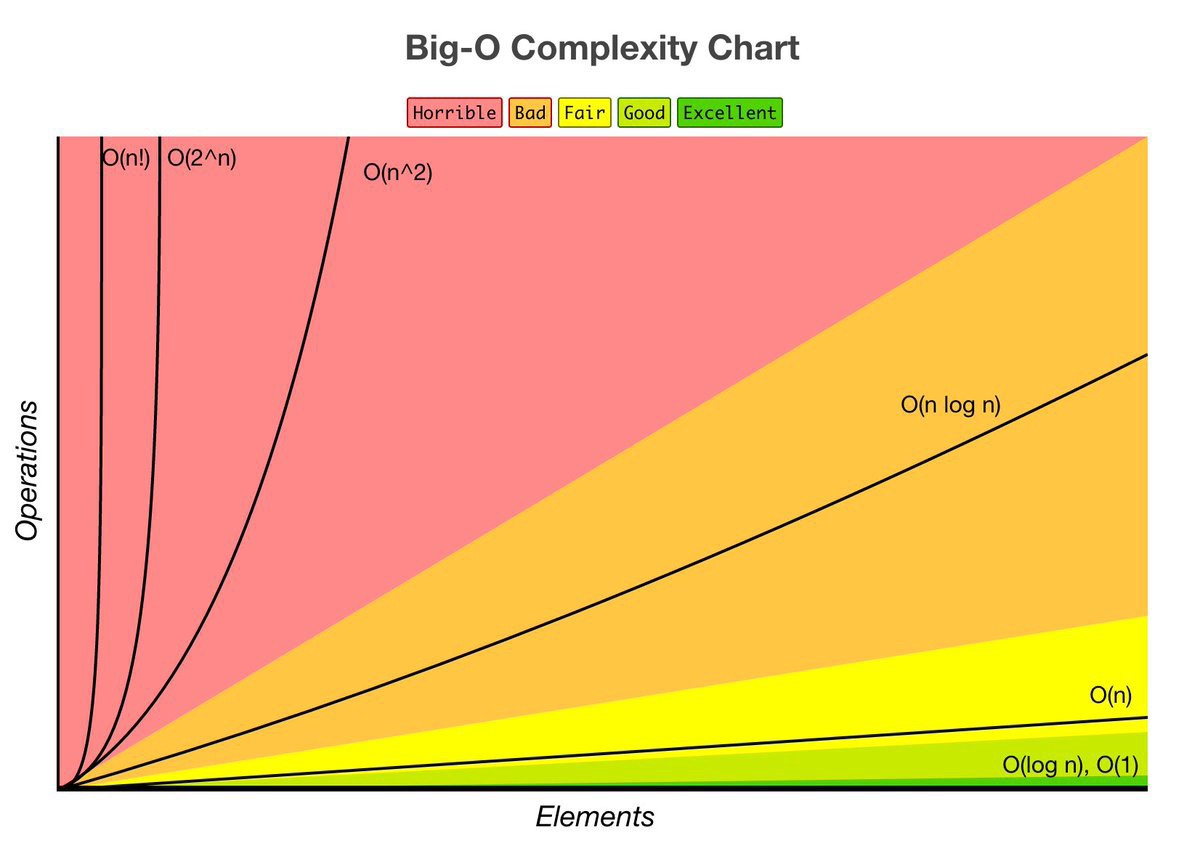

There are some common functions that many runtimes will simplify into. Here they are, from fastest runtime (slowest growing) to slowest runtime (fastest growing):

| Function | Name | Examples |
|---|---|---|
| $\Theta(1)$ | Constant | System.out.println, +, array accessing |
| $\Theta(\log n)$ | Log | Binary search |
| $\Theta(n)$ | Linear | Iterating through each element of a list |
| $\Theta(n \log n)$ | nlogn 😅 | Quicksort (average case), merge sort |
| $\Theta(n^2)$ | Quadratic | Bubble sort, nested for loops |
| $\Theta(2^n)$ | Exponential | Finding all possible subsets of a list, tree recursion |
| $\Theta(n!)$ | Factorial | Bogo sort, getting all permutations of a list |
| $\Theta(n^n)$ | n^n 😅😅 | Tetration |

Don’t worry about the examples you aren’t familiar with; I will go into much more detail on their respective pages.
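To get a feel for how differently these orders grow, here is a small sketch that counts steps for two of the patterns named in the table: repeated halving (the idea behind binary search) and tree recursion. The class and method names are hypothetical, and the counts are step counts under this sketch's own definitions, not measurements of any real library.

```java
// A sketch contrasting logarithmic and exponential step counts.
class GrowthDemo {
    /** Counts how many times n can be halved before reaching 1 (logarithmic). */
    static int halvingSteps(long n) {
        int steps = 0;
        while (n > 1) {
            n /= 2;
            steps++;
        }
        return steps;
    }

    /** Counts the calls made by a function that calls itself twice (exponential). */
    static long treeRecursionCalls(int n) {
        if (n == 0) {
            return 1; // the base case is a single call
        }
        // This call, plus the calls made by its two recursive branches.
        return 1 + treeRecursionCalls(n - 1) + treeRecursionCalls(n - 1);
    }

    public static void main(String[] args) {
        for (int n : new int[] {4, 8, 16}) {
            System.out.println("n = " + n
                    + ": halving steps = " + halvingSteps(n)
                    + ", n^2 = " + ((long) n * n)
                    + ", tree recursion calls = " + treeRecursionCalls(n));
        }
    }
}
```

Doubling n adds only one halving step, but it squares (roughly) the number of tree recursion calls, which is why exponential programs become impractical so quickly.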

Asymptotic Analysis: Step by Step

- Identify the function that needs to be analyzed.

- Identify the parameter to use as $n$.

- Identify the measurement that needs to be taken. (Time, space, etc.)

- Generate a function that represents the complexity. If you need help with this step, try some problems!


- Simplify the function (remove constants, smaller terms, and other variables).


- Select the correct bounds (O, Omega, Theta) for particular cases (best, worst, overall).
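Here is one way the steps above might play out on a small example. The function below (a hypothetical duplicate checker, with made-up names) is analyzed in its comments. The measurement chosen is the number of comparisons, which is an assumption made for this sketch.

```java
// A worked sketch of the step-by-step process on a hypothetical function.
class StepByStepDemo {
    /**
     * Step 1: the function to analyze. It looks for a duplicate value and
     * returns how many comparisons it made before stopping.
     * Step 2: the parameter n is items.length.
     * Step 3: the measurement is the number of comparisons (complexity).
     */
    static long comparisonsToFindDuplicate(int[] items) {
        long comparisons = 0;
        for (int i = 0; i < items.length; i++) {
            for (int j = i + 1; j < items.length; j++) {
                comparisons++;
                if (items[i] == items[j]) {
                    return comparisons; // duplicate found, stop early
                }
            }
        }
        return comparisons;
    }

    public static void main(String[] args) {
        // Step 4: with no duplicates, every pair is compared: n(n - 1)/2.
        int[] distinct = {1, 2, 3, 4, 5};
        System.out.println("no duplicates: " + comparisonsToFindDuplicate(distinct));
        // If the first two items match, a single comparison is enough.
        int[] early = {7, 7, 1, 2, 3};
        System.out.println("early duplicate: " + comparisonsToFindDuplicate(early));
        // Step 5: n(n - 1)/2 simplifies to n^2.
        // Step 6: worst case is Theta(n^2), best case is Theta(1),
        // so overall the function is O(n^2) and Omega(1).
    }
}
```

Note that the best and worst cases get their own Big Theta bounds, while the overall runtime only gets a Big O and a Big Omega because the two do not match.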